Almost without us noticing, personalisation has become a part of everyday life. We have never had access to more content, yet our online world is becoming increasingly individualised and streamlined. From suggested purchases to customised music playlists and recommendations on what to watch, personalisation algorithms are omnipresent.

Given the sheer volume of choice available, being presented with content tailored to our interests can feel both eerily accurate and comforting. But personalising the news, while largely necessary to survive in the current digital ecosystem, raises ethical and editorial challenges.

Public interest journalism plays a vital role in a democratic society by informing citizens, holding power to account, and fostering public debate. However, these stories, often depressing and on complicated topics that audiences have grown fatigued by, can struggle to achieve the same level of engagement as lighter, more entertaining content.

This discrepancy raises the question: if users are increasingly exposed to content that aligns with their existing views and interests, how can we ensure that important, albeit less popular, stories reach a wide audience? More broadly: how do we balance technological advancement with journalistic integrity and user trust?

To help answer those questions, I examined several approaches to personalisation and reviewed the latest academic literature on news recommender systems. I also interviewed technical and editorial personalisation experts at publishers in Sweden, India, Canada, the UK, and Australia, and spoke with leading machine learning researchers and academics working on this topic.

Why personalise the news?

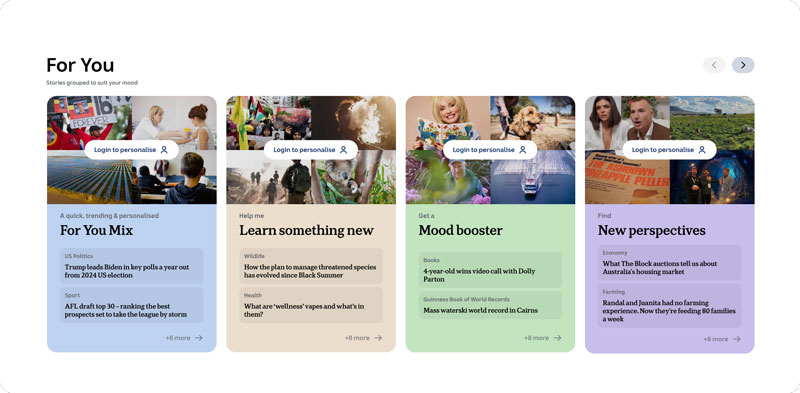

News recommender systems (NRS) can deliver otherwise undiscovered content to its intended audience more efficiently and effectively. This efficiency can increase the reach of content and improve the audience experience, both of which contribute to a healthier bottom line. However, there is concern that less popular but important stories may not surface as readily, or that readers may become siloed by their interests. There is no one-size-fits-all approach to news personalisation, but most companies keep a human in the loop.

Many news publishers produce vast amounts of content, much of which remains undiscovered by the public. NRS can counter this by surfacing relevant and valuable content, generating traffic, retaining subscribers, and engaging audiences more effectively than a human editor could alone. By delivering relevant content on their own platforms rather than through third parties, news organisations can also support their financial sustainability, which is in turn crucial for sustaining public interest journalism.

Differing approaches to personalisation

Over the course of this research, it became clear that no two media companies approach personalisation in the same way. Each approach largely depends on an organisation’s appetite for automation, innovation, and reputational risk, as well as its computational resources. For instance, Sky News UK has no personalisation on its homepage, whereas Canada’s The Globe and Mail is almost entirely algorithm-driven.

Sonali Verma, who led personalisation at The Globe and Mail, highlights the importance of maintaining editorial curation alongside algorithmic recommendations. The top three slots on The Globe and Mail’s homepage are always chosen by editors to highlight the most important stories, reflecting the newspaper’s role in setting the national agenda.
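The hybrid layout Verma describes, with fixed editorial slots at the top and algorithmic recommendations below, can be sketched as follows. This is a minimal illustration under stated assumptions, not The Globe and Mail’s actual system: the function name `assemble_homepage`, the story IDs, and the assumption that the recommender hands over a pre-sorted list of story IDs are all hypothetical.

```python
from typing import List


def assemble_homepage(
    editor_picks: List[str],
    ranked_for_user: List[str],
    total_slots: int,
    editor_slots: int = 3,
) -> List[str]:
    """Hypothetical hybrid homepage: editor picks first, then recommendations.

    editor_picks    -- story IDs chosen by editors, in priority order
    ranked_for_user -- story IDs from a recommender, best first (assumed input)
    """
    # The top slots are reserved for editors, whatever the algorithm suggests.
    page = list(editor_picks[:editor_slots])
    seen = set(page)
    # Fill the remaining slots from the personalised ranking, skipping
    # anything the editors have already placed.
    for story_id in ranked_for_user:
        if len(page) >= total_slots:
            break
        if story_id not in seen:
            page.append(story_id)
            seen.add(story_id)
    return page[:total_slots]


homepage = assemble_homepage(
    editor_picks=["budget", "election", "inquiry"],
    ranked_for_user=["sport", "election", "recipes", "weather"],
    total_slots=5,
)
print(homepage)  # ['budget', 'election', 'inquiry', 'sport', 'recipes']
```

The design choice here is that editorial picks are never re-ranked: the algorithm only decides what fills the space the editors leave, which is what keeps the most important stories in front of every reader.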

“Most news publishers produce a tonne of content that the public has no interest in and will never discover. So, if you can focus your resources on stuff that really matters and make it easy for people to find it, then you’re doing your news organisation a service and you’re building a better society,” Verma told me.

Integrating public interest values

Striking the right balance between algorithm-driven personalisation and editorial judgement is crucial for maintaining the integrity and reach of public interest journalism. While algorithms can identify and recommend content based on user behaviour and preferences, homepage editors play a vital role in ensuring that significant public interest stories are given prominence.

But algorithms can also promote and protect public interest journalism if they are designed around values-based parameters rather than user engagement metrics alone. Sveriges Radio (SR) in Sweden developed a “Public Service Algorithm” that integrates key values such as stories recorded on location, the inclusion of affected voices, new perspectives, and independent viewpoints. This method has been well received within SR’s newsrooms and is seen across the industry as a model for integrating AI into public service journalism.
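To make “values-based parameters” concrete, here is a minimal sketch of how editor-assigned value tags could feed a ranking score. It is not SR’s implementation: the tag names, the equal weights in `VALUE_WEIGHTS`, and the helpers `value_score` and `rank_by_values` are hypothetical, chosen only to mirror the four values listed above.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical, equal weights: the real parameters of SR's algorithm are not
# described here.
VALUE_WEIGHTS: Dict[str, float] = {
    "on_location": 1.0,            # story recorded on location
    "affected_voices": 1.0,        # includes the voices of those affected
    "new_perspective": 1.0,        # offers a new perspective
    "independent_viewpoint": 1.0,  # offers an independent viewpoint
}


@dataclass
class Story:
    story_id: str
    # Editor-assigned flags, one per value; missing keys count as False.
    value_tags: Dict[str, bool] = field(default_factory=dict)


def value_score(story: Story) -> float:
    """Sum the weights of every public service value the story is tagged with."""
    return sum(
        (weight for value, weight in VALUE_WEIGHTS.items()
         if story.value_tags.get(value, False)),
        0.0,
    )


def rank_by_values(stories: List[Story]) -> List[str]:
    """Order story IDs by value score, highest first; ties keep input order."""
    return [s.story_id for s in sorted(stories, key=value_score, reverse=True)]


ranked = rank_by_values([
    Story("celebrity"),
    Story("flood", {"on_location": True, "affected_voices": True}),
    Story("council", {"independent_viewpoint": True}),
])
print(ranked)  # ['flood', 'council', 'celebrity']
```

A score like this relies on consistent editorial tagging, which is one reason poor tagging appears among the IT challenges discussed below.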

Professor of Machine Learning Michael A. Osborne from the University of Oxford said that defining the “reward function” or “loss function” within machine learning models is critical. “If your platform is only targeting a click-through rate or something similar, it’s likely that those public interest groups will be dismissed relative to more popular stories,” he said. “To surface the public interest stories, you need to give more thought into exactly what you want the algorithm to do. What is the goal exactly? And how can you bring your users on board with that goal?”
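Osborne’s point about the reward function can be illustrated with a toy objective that mixes predicted click-through rate with a public-interest score. This is a sketch under assumptions, not a method described by Osborne or any publisher: the linear blend, the mixing parameter `lam`, the scores, and the function names are all hypothetical.

```python
from typing import Dict, List, Tuple


def blended_reward(ctr: float, public_interest: float, lam: float) -> float:
    """Hypothetical objective mixing engagement with a public-interest score.

    lam = 0.0 reproduces a pure click-through objective; lam = 1.0 ignores clicks.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be between 0 and 1")
    return (1.0 - lam) * ctr + lam * public_interest


def rank(stories: Dict[str, Tuple[float, float]], lam: float) -> List[str]:
    """Rank story IDs by blended reward, highest first.

    stories maps story_id -> (predicted_ctr, public_interest_score).
    """
    return sorted(
        stories,
        key=lambda sid: blended_reward(stories[sid][0], stories[sid][1], lam),
        reverse=True,
    )


# Illustrative numbers only.
stories = {
    "celebrity": (0.12, 0.1),      # clicky, low public interest
    "investigation": (0.04, 0.9),  # fewer clicks, high public interest
}
print(rank(stories, lam=0.0))  # ['celebrity', 'investigation']
print(rank(stories, lam=0.3))  # ['investigation', 'celebrity']
```

With `lam=0.0` the investigation is dismissed exactly as Osborne warns. In this toy example the click-through rates are far smaller than the public-interest scores, so even a modest `lam` flips the order; a real system would need to put the two terms on comparable scales, and deciding how much weight each deserves is the “what is the goal exactly?” question he raises.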

Implementation challenges

A 2023 study by Anna Schjøtt Hansen and Professor Jannie Møller Hartley highlighted the difficulties of integrating a personalisation algorithm with traditional news values. They documented concerns about a loss of editorial control, as editors and journalists at the news organisation they studied initially struggled to encode traditional news values in the algorithm.

As I discovered, the project they were tracking, in a Danish newsroom, eventually failed. The researchers said the struggle they witnessed emphasises the need for effective communication and collaboration between data scientists and editorial and IT teams.

Professor Hartley told me: “Some organisations say, ‘OK, there will be resistance, so we don’t involve [the newsroom] until it’s ready to press the button’. And then there are the others who get that because of that resistance, we need to have [the newsroom] onboard from the very beginning, and also have them co-design.”

Anyone hoping to spare their project a similar fate should first break down newsroom silos, then develop and share a comprehensive strategy and seek feedback across the newsroom. Next, they should ensure the tech and editorial teams are aligned on values, and finally anticipate and mitigate IT challenges such as slow servers, poor tagging, or faulty data.

Even at SR, I was told, some digital staff feel constrained by the algorithm, seeing it as a “straitjacket” that limits their ability to curate news stories manually.

Some digital editors try to bypass the algorithm’s constraints with manual workarounds. For instance, they might pin top stories or alter publication times to give certain stories more visibility. This reflects a tension between adhering to the automated system and maintaining editorial flexibility.
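Why do these workarounds succeed? Assuming the algorithm discounts stories by age, as the publication-time workaround suggests, the effect can be sketched with a hypothetical ranking that multiplies a relevance score by an exponential recency decay. SR’s actual formula is not described here; the six-hour half-life, the relevance values, and the function names are illustrative assumptions.

```python
from typing import List, Set, Tuple

# Hypothetical: assume relevance is discounted by age with a 6-hour half-life.
HALF_LIFE_HOURS = 6.0


def recency_weight(age_hours: float, half_life: float = HALF_LIFE_HOURS) -> float:
    """Exponential decay: 1.0 at publication, 0.5 after one half-life."""
    return 0.5 ** (age_hours / half_life)


def rank_with_pins(
    stories: List[Tuple[str, float, float]],  # (story_id, relevance, age_hours)
    pinned: Set[str],
) -> List[str]:
    """Pinned stories first (in input order), the rest by relevance * recency."""
    pinned_ids = [sid for sid, _, _ in stories if sid in pinned]
    others = [s for s in stories if s[0] not in pinned]
    others.sort(key=lambda s: s[1] * recency_weight(s[2]), reverse=True)
    return pinned_ids + [sid for sid, _, _ in others]


stories = [("budget", 0.8, 12.0), ("sport", 0.6, 1.0), ("weather", 0.5, 2.0)]
print(rank_with_pins(stories, pinned=set()))       # ['sport', 'weather', 'budget']

# Workaround 1: "republish" the budget story so its age resets to zero.
republished = [("budget", 0.8, 0.0)] + stories[1:]
print(rank_with_pins(republished, pinned=set()))   # ['budget', 'sport', 'weather']

# Workaround 2: pin it, bypassing the score entirely.
print(rank_with_pins(stories, pinned={"budget"}))  # ['budget', 'sport', 'weather']
```

Both workarounds reach the same result by different routes: changing the timestamp games the recency term, while pinning sidesteps the scoring altogether, which is why such manual interventions sit uneasily with the automated system.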

Conclusion

Integrating editorial judgement into the development of more advanced personalisation algorithms shows promise, as the experience of several media organisations profiled here demonstrates. Models like SR’s “Public Service Algorithm” and human-in-the-loop approaches illustrate how personalisation can align with journalistic values. A 2024 systematic literature review of value-aware news recommender systems found that systems prioritising diversity, recency, privacy, and transparency point the way towards ethically responsible personalisation.

Protecting public interest journalism while personalising the news is not just a technical challenge but an ethical one. It requires thoughtful integration of technology with sound editorial judgement and clear principles. If we get it right, and we must for the sake of public interest journalism, there are many rewards to reap.

For a deeper dive into these findings and insights, including a look towards the future of personalisation in the context of generative AI, download the full paper below.